System helps smart devices find their position

A new system developed by researchers at MIT and elsewhere helps networks of smart devices cooperate to find their positions in environments where GPS usually fails.

Today, the “internet of things” concept is fairly well-known: Billions of interconnected sensors around the world—embedded in everyday objects, equipment, and vehicles, or worn by humans or animals—collect and share data for a range of applications.

An emerging concept, the “localization of things,” enables those devices to sense and communicate their position. This capability could be helpful in supply chain monitoring, autonomous navigation, highly connected smart cities, and even forming a real-time “living map” of the world. Experts project that the localization-of-things market will grow to $128 billion by 2027.

The concept hinges on precise localization techniques. Traditional methods leverage GPS satellites or wireless signals shared between devices to establish their relative distances and positions from each other. But there’s a snag: Accuracy suffers greatly in places with reflective surfaces, obstructions, or other interfering signals, such as inside buildings, in underground tunnels, or in “urban canyons” where tall buildings flank both sides of a street.

Researchers from MIT, the University of Ferrara, the Basque Center for Applied Mathematics (BCAM), and the University of Southern California have developed a system that captures location information even in these noisy, GPS-denied areas. A paper describing the system appears in the Proceedings of the IEEE.

When devices in a network, called “nodes,” communicate wirelessly in a signal-obstructing, or “harsh,” environment, the system fuses various types of positional information from unreliable wireless signals exchanged between the nodes, as well as digital maps and inertial data. In doing so, each node considers information associated with all possible locations, called “soft information,” in relation to those of all other nodes. The system leverages machine learning and dimensionality-reduction techniques to determine possible positions from measurements and contextual data. Using that information, it then pinpoints the node’s position.

In simulations of harsh scenarios, the system operated significantly better than traditional methods. Notably, it consistently performed near the theoretical limit for localization accuracy. Moreover, as the wireless environment grew increasingly worse, traditional systems’ accuracy dipped dramatically while the new soft information-based system held steady.

“When the tough gets tougher, our system keeps localization accurate,” says Moe Win, a professor in the Department of Aeronautics and Astronautics and the Laboratory for Information and Decision Systems (LIDS), and head of the Wireless Information and Network Sciences Laboratory. “In harsh wireless environments, you have reflections and echoes that make it far more difficult to get accurate location information. Places like the Stata Center [on the MIT campus] are particularly challenging, because there are surfaces reflecting signals everywhere. Our soft information method is particularly robust in such harsh wireless environments.”

Joining Win on the paper are: Andrea Conti of the University of Ferrara; Santiago Mazuelas of BCAM; Stefania Bartoletti of the University of Ferrara; and William C. Lindsey of the University of Southern California.

Capturing “soft information”

In network localization, nodes are generally referred to as anchors or agents. Anchors are nodes with known positions, such as GPS satellites or wireless base stations. Agents are nodes that have unknown positions—such as autonomous cars, smartphones, or wearables.

To localize, agents can use anchors as reference points, or they can share information with other agents to orient themselves. That involves transmitting wireless signals, which arrive at the receiver carrying positional information. The power, angle, and time-of-arrival of the received waveform, for instance, correlate to the distance and orientation between nodes.

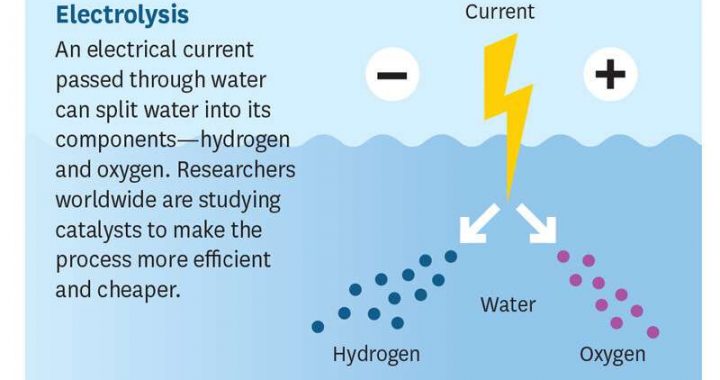

Traditional localization methods extract one feature of the signal to estimate a single value for, say, the distance or angle between two nodes. Localization accuracy relies entirely on the accuracy of those inflexible (or “hard”) values, and accuracy has been shown to decrease drastically as environments get harsher.

Say a node transmits a signal to another node that’s 10 meters away in a building with many reflective surfaces. The signal may bounce around and reach the receiving node at a time corresponding to a distance of 13 meters. Traditional methods would likely accept that incorrect distance as the true value.
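The arithmetic behind this example can be sketched in a few lines of Python. This is an illustration only. It assumes a simple time-of-flight model in which distance equals propagation time multiplied by the speed of light. The function name `hard_range_estimate` and the scenario values are hypothetical and are not taken from the researchers' system.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # propagation speed of a radio signal in vacuum


def hard_range_estimate(time_of_flight_s: float) -> float:
    """Convert one time-of-flight measurement into a single "hard" distance value."""
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s


# Hypothetical scenario: the nodes are 10 m apart, but reflections
# lengthen the path the signal actually travels to 13 m.
direct_path_m = 10.0
reflected_path_m = 13.0
measured_tof_s = reflected_path_m / SPEED_OF_LIGHT_M_PER_S

estimate_m = hard_range_estimate(measured_tof_s)
error_m = estimate_m - direct_path_m

print(f"time of flight: {measured_tof_s * 1e9:.1f} ns")
print(f"hard estimate: {estimate_m:.1f} m (error {error_m:+.1f} m)")
```

Under these assumptions, a delay of only about 43 nanoseconds is enough to produce the 13-meter reading, and a method that keeps just that single value has no way to recover the 3-meter error later.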

For the new work, the researchers decided to try using soft information for localization. The method leverages many signal features and contextual information to create a probability distribution of all possible distances, angles, and other metrics. “It’s called ‘soft information’ because we don’t make any hard choices about the values,” Conti says.

The system takes many sample measurements of signal features, including its power, angle, and time of flight. Contextual data come from external sources, such as digital maps and models that capture and predict how the node moves.

Back to the previous example: Based on the initial measurement of the signal’s time of arrival, the system still assigns a high probability that the nodes are 13 meters apart. But it assigns a small probability that they’re 10 meters apart, based on some delay or power loss of the signal. As the system fuses all other information from surrounding nodes, it updates the likelihood for each possible value. For instance, it could consult a map and see that the room’s layout makes it highly unlikely that the two nodes are 13 meters apart. Combining all the updated information, it decides the node is far more likely to be in the position that is 10 meters away.
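The updating step in this example can be illustrated with a toy calculation. The sketch below is not the researchers' algorithm. It assumes a discrete grid of candidate distances, a two-peak likelihood for the time-of-arrival measurement, a made-up map constraint (a room about 11 meters across), and independence between the two sources so that they can be fused by pointwise multiplication. All weights and widths are hypothetical.

```python
import math


def gaussian(x: float, mean: float, std: float) -> float:
    """Unnormalized Gaussian bump, used here as a simple likelihood shape."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2)


def normalize(weights: list[float]) -> list[float]:
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


# Candidate distances from 0.0 m to 20.0 m in 0.5 m steps (assumed grid).
distances_m = [0.5 * i for i in range(41)]

# Soft information from time of arrival: mostly 13 m, small chance of 10 m.
toa_soft = normalize([
    0.85 * gaussian(d, 13.0, 0.5) + 0.15 * gaussian(d, 10.0, 0.5)
    for d in distances_m
])

# Contextual soft information from a hypothetical map: the room is about
# 11 m across, so larger separations are very unlikely but not impossible.
map_soft = normalize([1.0 if d <= 11.0 else 0.01 for d in distances_m])

# Fuse the two sources: multiply pointwise (assumes independence), renormalize.
fused = normalize([t * m for t, m in zip(toa_soft, map_soft)])

before = distances_m[toa_soft.index(max(toa_soft))]
after = distances_m[fused.index(max(fused))]
print(f"most likely distance before fusion: {before} m")
print(f"most likely distance after fusion:  {after} m")
```

Because the 10-meter hypothesis was kept with a small weight rather than discarded, the map information can promote it once the 13-meter hypothesis is ruled out. A hard estimate would have had nothing left to fall back on.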

“In the end, keeping that low-probability value matters,” Win says. “Instead of giving a definite value, I’m telling you I’m really confident that you’re 13 meters away, but there’s a smaller possibility you’re also closer. This gives additional information that benefits significantly in determining the positions of the nodes.”

Reducing complexity

Extracting many features from signals, however, produces high-dimensional data that can be too complex and inefficient for the system to process. To improve efficiency, the researchers mapped all signal data into a lower-dimensional, easily computable space.

To do so, they identified aspects of the received waveforms that are the most and least useful for pinpointing location based on “principal component analysis,” a technique that keeps the most useful aspects in multidimensional datasets and discards the rest, creating a dataset with reduced dimensions. If received waveforms contain 100 sample measurements each, the technique might reduce that number to, say, eight.
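As a rough illustration of that reduction, the sketch below applies textbook principal component analysis with NumPy to synthetic data, shrinking 100 samples per waveform down to eight values. The waveforms are randomly generated stand-ins, the helper name `pca_reduce` is hypothetical, and plain PCA ranks directions by variance, which may differ from the usefulness criterion the researchers applied.

```python
import numpy as np


def pca_reduce(waveforms: np.ndarray, n_components: int) -> np.ndarray:
    """Project each row of `waveforms` onto its top principal components."""
    centered = waveforms - waveforms.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(seed=0)
n_waveforms, n_samples, n_kept = 500, 100, 8

# Synthetic stand-in for received waveforms: a few latent factors mixed
# into 100 samples per waveform, plus a small amount of noise.
latent = rng.normal(size=(n_waveforms, n_kept))
mixing = rng.normal(size=(n_kept, n_samples))
noise = 0.05 * rng.normal(size=(n_waveforms, n_samples))
waveforms = latent @ mixing + noise

reduced = pca_reduce(waveforms, n_kept)
print(waveforms.shape, "->", reduced.shape)
```

Each waveform is then represented by eight numbers instead of 100, so any later processing works on a much smaller input.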

A final innovation was using machine-learning techniques to learn a statistical model describing possible positions from measurements and contextual data. That model runs in the background to gauge how signal bouncing may affect measurements, helping to further refine the system’s accuracy.

The researchers are now designing ways to use less computational power so the system can work with resource-strapped nodes that can’t transmit or compute all necessary information. They’re also working on bringing the system to “device-free” localization, where some of the nodes can’t or won’t share information. This will use information about how the signals are backscattered off these nodes, so other nodes know they exist and where they are located.